Pool Scaling

Adjust NodeGroup capacity by scaling nodes up or down based on workload demands.

Scaling Operations

- Scale Up: Add nodes to increase capacity

- Scale Down: Remove nodes to reduce capacity and costs

- Right-sizing: Adjust size to match usage patterns

Scaling Constraints

- Minimum: 1 node for worker NodeGroups

- Maximum: 10 nodes per NodeGroup

- NodeGroup must be in "Ready" state

- Subject to vCloud quota limits
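The constraints above can be read as a pre-flight check before a scaling request is submitted. The following is a minimal sketch, not the platform's implementation: the function name `scale_blocker`, its parameters, and the idea of expressing the vCloud quota as a count of additional nodes are all assumptions made for illustration.

```python
from typing import Optional

MIN_NODES = 1   # minimum for worker NodeGroups, per the constraints above
MAX_NODES = 10  # maximum per NodeGroup, per the constraints above


def scale_blocker(state: str, current_nodes: int, target_nodes: int,
                  quota_nodes_available: int) -> Optional[str]:
    """Return a reason the request would be rejected, or None if it passes.

    `quota_nodes_available` is a hypothetical simplification: it treats the
    remaining vCloud quota as a number of additional nodes.
    """
    if state != "Ready":
        return f"NodeGroup is in state {state!r}; it must be Ready"
    if not MIN_NODES <= target_nodes <= MAX_NODES:
        return f"target must be between {MIN_NODES} and {MAX_NODES} nodes"
    added = target_nodes - current_nodes
    if added > quota_nodes_available:
        return "scaling up would exceed the remaining quota"
    return None
```

A scale-down never trips the quota check in this sketch, because `added` is zero or negative.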

NodeGroup Configuration

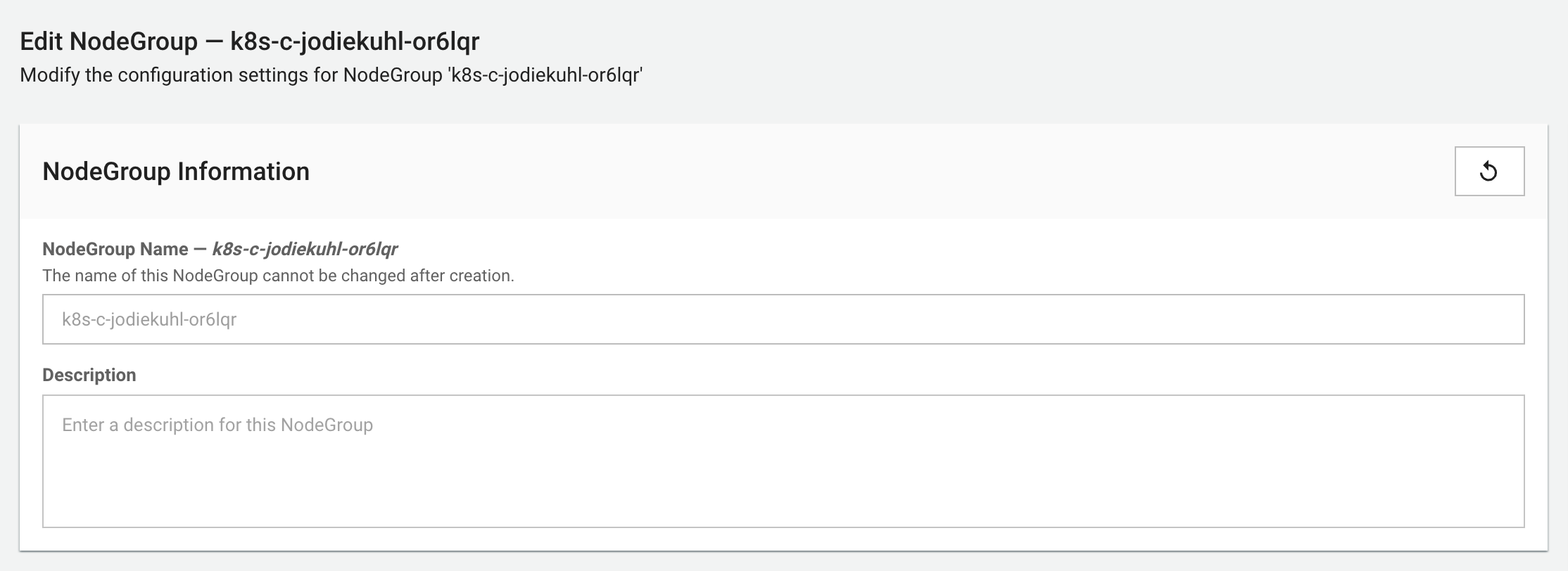

Basic Information

When editing a NodeGroup, you can modify basic configuration settings including name and description.

NodeGroup edit interface showing basic information and description fields

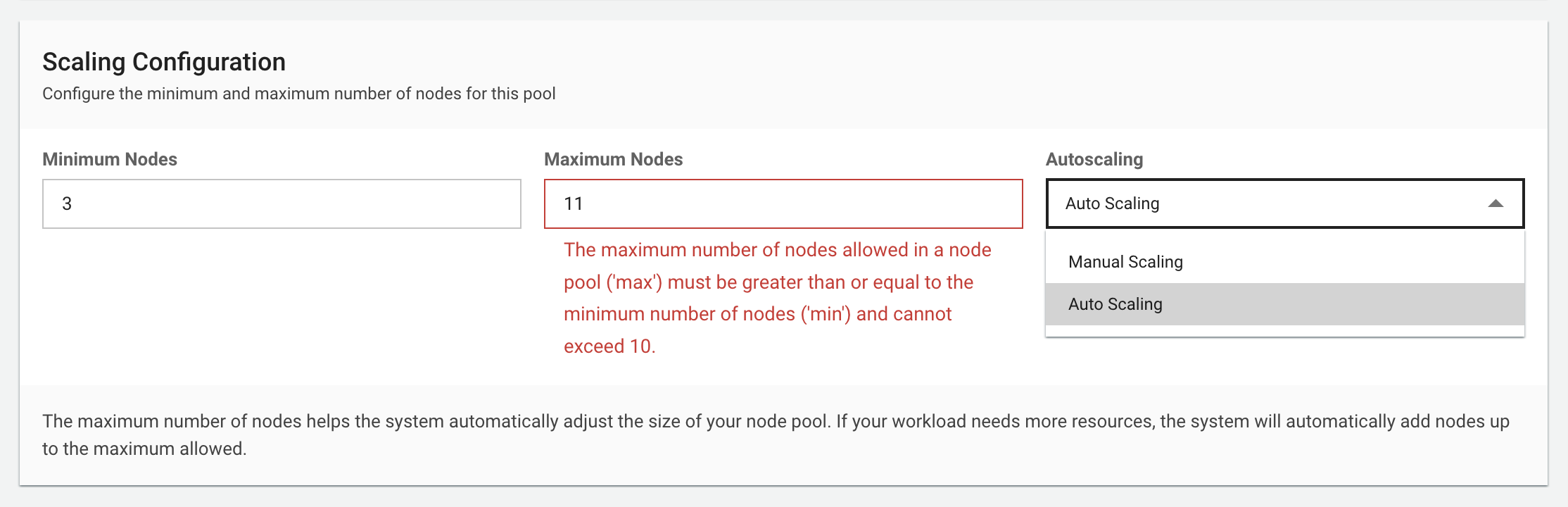

Scaling Configuration

Configure the minimum and maximum number of nodes for scaling operations. The system validates that the maximum does not exceed 10 and is greater than or equal to the minimum.

Scaling configuration interface with minimum/maximum nodes and autoscaling options

Configuration Options:

- Minimum Nodes: Set the minimum number of nodes (cannot be less than 1)

- Maximum Nodes: Set the maximum number of nodes (cannot exceed 10)

- Autoscaling: Choose between Manual Scaling and Auto Scaling modes

- Validation: Values are checked in real time against these limits as you edit
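The validation rules for the minimum and maximum fields can be sketched as a small function. This is an illustration under assumptions, not the console's actual code: the function name `validate_scaling_limits` and the error messages are hypothetical, while the limits (at least 1, at most 10, maximum not below minimum) come from the options listed above.

```python
from typing import List

MIN_NODES_FLOOR = 1     # Minimum Nodes cannot be less than 1
MAX_NODES_CEILING = 10  # Maximum Nodes cannot exceed 10


def validate_scaling_limits(min_nodes: int, max_nodes: int) -> List[str]:
    """Return every rule violation; an empty list means the values are valid."""
    errors = []
    if min_nodes < MIN_NODES_FLOOR:
        errors.append(f"Minimum Nodes cannot be less than {MIN_NODES_FLOOR}")
    if max_nodes > MAX_NODES_CEILING:
        errors.append(f"Maximum Nodes cannot exceed {MAX_NODES_CEILING}")
    if max_nodes < min_nodes:
        errors.append("Maximum Nodes must be greater than or equal to Minimum Nodes")
    return errors
```

Collecting all violations instead of stopping at the first mirrors how a form with real-time validation would typically flag each field independently.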

Advanced Configuration

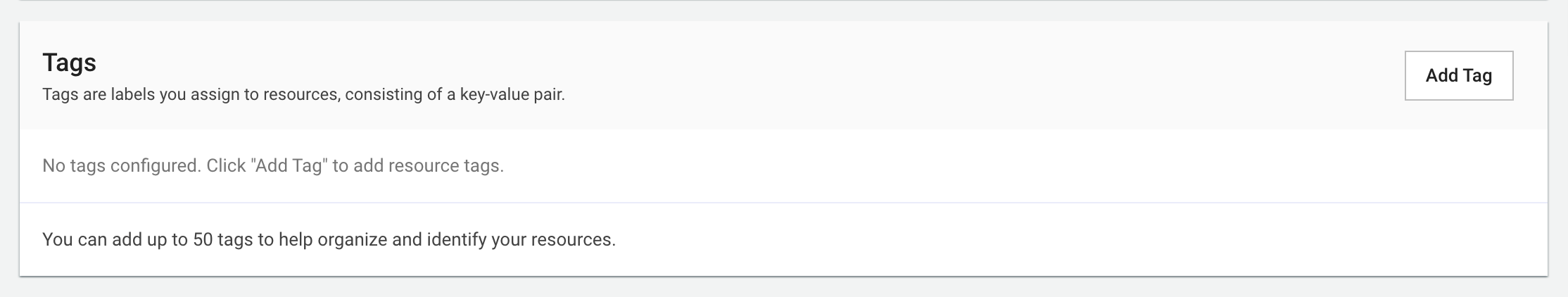

Resource Tags

Add tags to organize and identify your NodeGroup resources. Tags are key-value pairs that help with resource management and billing.

Tags configuration interface for resource organization and identification

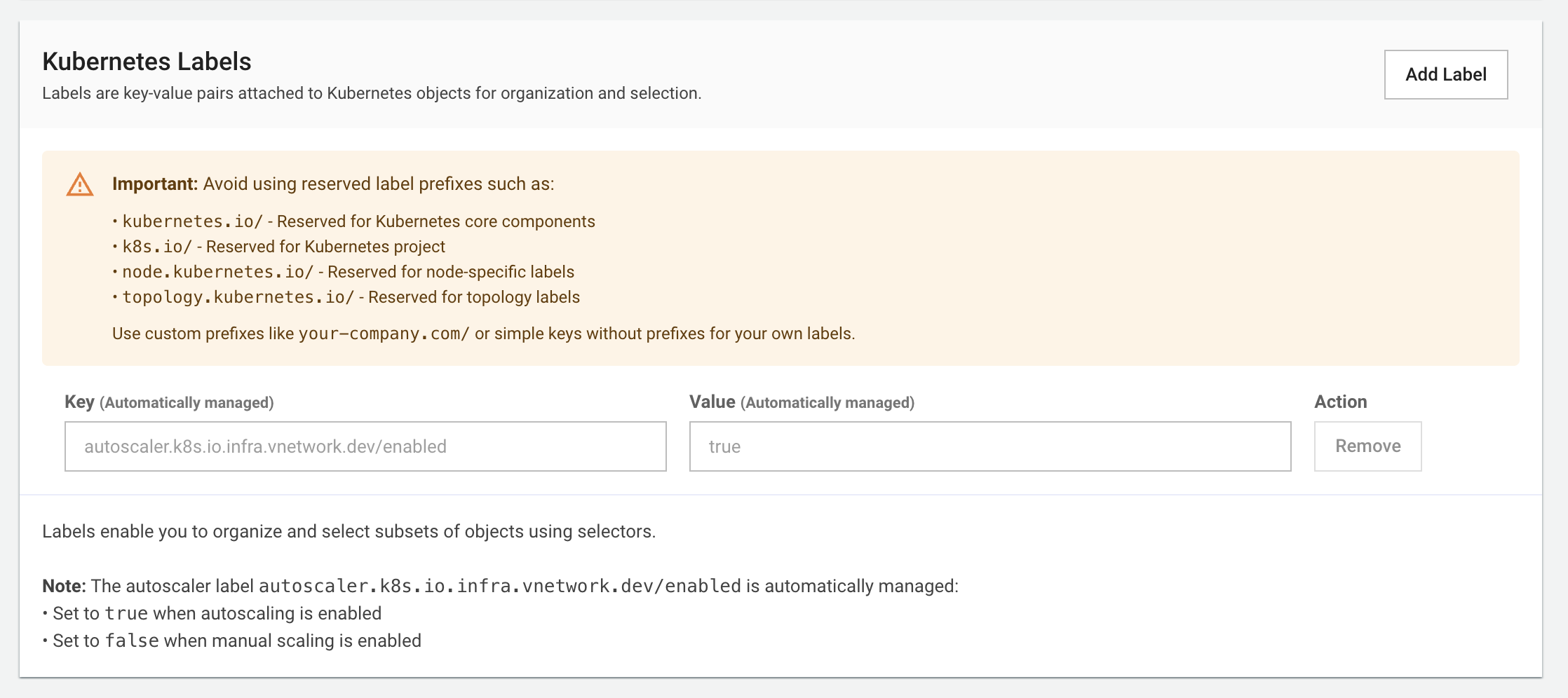

Kubernetes Labels

Configure Kubernetes labels for node organization and pod scheduling. The autoscaler label is automatically managed by the system.

Kubernetes labels configuration including autoscaler settings

Important Label:

- autoscaler.k8s.io.infra.vnetwork.dev/enabled: Automatically managed for autoscaling

  - Set to true when autoscaling is enabled

  - Set to false when manual scaling is enabled
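The relationship between the scaling mode and the managed label can be sketched as follows. The label key is taken from this page; the helper names `autoscaler_label` and `effective_labels` are hypothetical, and the rule that the system's value overrides a user-supplied value for the same key is an assumption based on the label being described as automatically managed.

```python
from typing import Dict

AUTOSCALER_LABEL = "autoscaler.k8s.io.infra.vnetwork.dev/enabled"


def autoscaler_label(autoscaling_enabled: bool) -> Dict[str, str]:
    """Map the scaling mode to the managed label (label values are strings)."""
    return {AUTOSCALER_LABEL: "true" if autoscaling_enabled else "false"}


def effective_labels(user_labels: Dict[str, str],
                     autoscaling_enabled: bool) -> Dict[str, str]:
    """Combine user labels with the managed label.

    Assumption: the managed value wins if the user supplies the same key.
    """
    merged = dict(user_labels)  # copy so the caller's dict is not mutated
    merged.update(autoscaler_label(autoscaling_enabled))
    return merged
```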

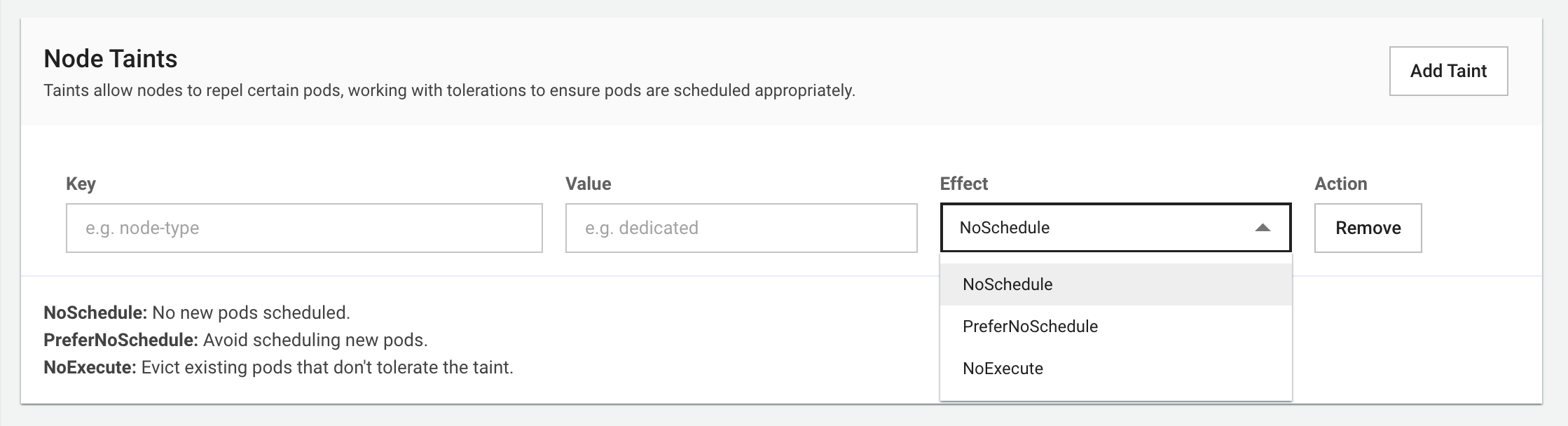

Node Taints

Configure node taints to control pod scheduling and ensure workloads are placed on appropriate nodes.

Node taints configuration for controlling pod scheduling

Taint Effects:

- NoSchedule: Prevents new pods from being scheduled

- PreferNoSchedule: Avoids scheduling new pods when possible

- NoExecute: Evicts existing pods that don't tolerate the taint
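To make the three effects concrete, the sketch below models how a pod's tolerations are compared against a node's taints. It is a simplified Python model of the standard Kubernetes matching rules, not scheduler code: the `Taint` and `Toleration` classes and the helper functions are names introduced here for illustration, and features such as `tolerationSeconds` are left out.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str      # "NoSchedule", "PreferNoSchedule", or "NoExecute"
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key with operator "Exists" matches any key
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """True if this single toleration matches this single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def untolerated_effects(taints: List[Taint], tols: List[Toleration]) -> Set[str]:
    """Effects of all taints that no toleration matches."""
    return {t.effect for t in taints if not any(tolerates(x, t) for x in tols)}


def can_schedule(taints: List[Taint], tols: List[Toleration]) -> bool:
    """PreferNoSchedule is only a soft preference, so it never blocks."""
    effects = untolerated_effects(taints, tols)
    return "NoSchedule" not in effects and "NoExecute" not in effects


def would_evict(taints: List[Taint], tols: List[Toleration]) -> bool:
    """Only an untolerated NoExecute taint evicts a pod that is already running."""
    return "NoExecute" in untolerated_effects(taints, tols)
```

For example, a node tainted with key `dedicated`, value `gpu`, and effect `NoSchedule` rejects new pods unless they carry a matching toleration, while pods already running on it are left in place.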

Scaling Process

- Access NodeGroup: Navigate to your cluster and select the NodeGroup

- Edit Configuration: Click edit to access scaling settings

- Adjust Limits: Modify minimum and maximum node counts

- Configure Autoscaling: Choose between manual or automatic scaling

- Apply Changes: Save configuration to enable new scaling limits

When to Scale

Scale Up

- Increased workload demands

- Performance bottlenecks

- Capacity planning

- High availability requirements

Scale Down

- Cost optimization

- Low resource utilization

- Maintenance activities

- Resource reallocation